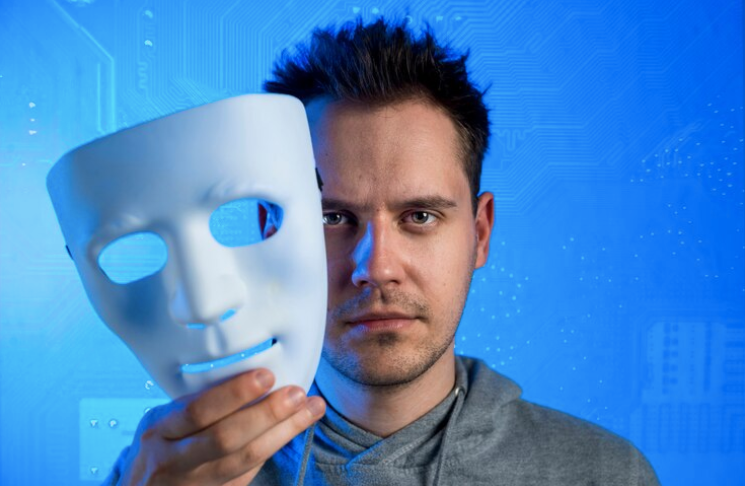

Technology changes with every passing day, and the line between reality and fiction is becoming increasingly blurred, thanks to a technological phenomenon known as deepfakes. Leveraging the power of artificial intelligence (AI), deepfakes can create astonishingly realistic images, videos, and audio recordings of people doing or saying things they never actually did. While this technology holds promise for various legitimate applications, it also raises significant ethical, legal, and security concerns. This article delves into what deepfakes are, how they are created, their potential uses, and the challenges they pose.

What Are Deepfakes?

Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness. The term “deepfake” is derived from “deep learning,” a subset of AI, and “fake,” reflecting the technology’s ability to create highly convincing fabrications. Unlike traditional forms of digital manipulation, which often require significant skill and time, deepfakes utilize sophisticated algorithms to automate and enhance the process. As the technology advances, deepfakes increasingly affect our personal lives as well.

How Are Deepfakes Created?

The creation of deepfakes primarily involves two types of neural networks: Generative Adversarial Networks (GANs) and autoencoders.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, the generator and the discriminator, that are trained against each other. The generator creates fake images or videos, while the discriminator tries to tell them apart from real ones. Each network's progress puts pressure on the other, and over many training rounds the generator's output becomes increasingly difficult to distinguish from real media.

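- Autoencoders: Autoencoders compress an image into a compact representation and then reconstruct it. In the context of deepfakes, a common setup trains one shared encoder together with a separate decoder for each person. Encoding one person’s face and decoding it with the other person’s decoder transfers the likeness while keeping the original expression and pose, allowing for seamless video manipulations.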
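The adversarial training loop described in the GAN bullet can be sketched on a deliberately tiny problem. This is an illustration only, not how production deepfake models are built: the "media" here are single numbers drawn from a normal distribution, the generator is the linear function `a*z + b`, and the discriminator is a logistic classifier. All names (`train_toy_gan`, `discriminator_step`, `generator_step`) and all hyperparameters are assumptions made for this sketch.

```python
import math
import random


def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """One gradient step for D(x) = sigmoid(w*x + c).

    Minimizes -log D(real) - log(1 - D(fake)), i.e. the discriminator
    learns to score real samples high and generated samples low.
    """
    gw = gc = 0.0
    for x in real:
        d = sigmoid(w * x + c)
        gw += -(1.0 - d) * x
        gc += -(1.0 - d)
    for x in fake:
        d = sigmoid(w * x + c)
        gw += d * x
        gc += d
    n = len(real) + len(fake)
    return w - lr * gw / n, c - lr * gc / n


def generator_step(a, b, w, c, noise, lr):
    """One gradient step for G(z) = a*z + b.

    Minimizes -log D(G(z)), i.e. the generator is rewarded when the
    discriminator mistakes its output for real data.
    """
    ga = gb = 0.0
    for z in noise:
        x = a * z + b
        d = sigmoid(w * x + c)
        dx = -(1.0 - d) * w  # gradient of the loss w.r.t. the fake sample
        ga += dx * z
        gb += dx
    n = len(noise)
    return a - lr * ga / n, b - lr * gb / n


def train_toy_gan(steps=2000, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        # "Real media": numbers centered on 4.0 (an arbitrary choice).
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        # Alternate: discriminator update first, then generator update.
        w, c = discriminator_step(w, c, real, fake, lr)
        a, b = generator_step(a, b, w, c, noise, lr)
    return a, b, w, c
```

Calling `train_toy_gan()` returns the final generator and discriminator parameters. Real systems replace the scalars with images and the linear maps with deep convolutional networks, but the alternating updates follow the same pattern. Toy GANs like this one can oscillate rather than settle, so no particular final values should be expected.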

The deepfake creation process typically involves the following steps:

- Data Collection: Gathering a substantial amount of video footage or images of the target individual to train the AI model.

- Training the Model: Feeding the collected data into the neural networks to learn the person’s facial features, expressions, and movements.

- Generation: Using the trained model to overlay the target’s likeness onto another person’s face in a video or image.
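The three steps above can be sketched end to end with a toy face-swap autoencoder. This is a minimal illustration under strong assumptions: each "face" is a 4-number vector rather than an image, the networks are plain linear maps trained by gradient descent, and the setup uses one shared encoder with one decoder per person, which is one common design and not the only one. The names `make_faces`, `train_face_swap`, and `swap_a_to_b`, along with every constant, are hypothetical.

```python
import random


def matvec(m, v):
    # Multiply matrix m (list of rows) by vector v.
    return [sum(mi * vi for mi, vi in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng, scale=0.3):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def make_faces(identity, n, rng):
    # Step 1 (data collection), simulated: a fixed identity vector plus a
    # varying "expression" component shared by both people.
    expression = [0.0, 0.0, 1.0, -1.0]
    faces = []
    for _ in range(n):
        t = rng.uniform(-1.0, 1.0)
        faces.append([b + t * e for b, e in zip(identity, expression)])
    return faces


def recon_loss(enc, dec, faces):
    # Mean squared reconstruction error of decode(encode(face)).
    total = 0.0
    for x in faces:
        y = matvec(dec, matvec(enc, x))
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(faces)


def train_step(enc, dec, x, lr):
    # One gradient step on 0.5 * ||dec(enc(x)) - x||^2, updating in place.
    h = matvec(enc, x)
    r = [yi - xi for yi, xi in zip(matvec(dec, h), x)]
    # Gradient w.r.t. the latent code, computed before dec is changed.
    gh = [sum(dec[i][j] * r[i] for i in range(len(r))) for j in range(len(h))]
    for i in range(len(dec)):
        for j in range(len(h)):
            dec[i][j] -= lr * r[i] * h[j]
    for j in range(len(enc)):
        for k in range(len(x)):
            enc[j][k] -= lr * gh[j] * x[k]


def train_face_swap(epochs=300, lr=0.02, seed=0):
    rng = random.Random(seed)
    faces_a = make_faces([1.0, 0.0, 0.5, 0.5], 20, rng)
    faces_b = make_faces([0.0, 1.0, 0.5, 0.5], 20, rng)
    enc = rand_matrix(2, 4, rng)    # shared encoder
    dec_a = rand_matrix(4, 2, rng)  # decoder for person A
    dec_b = rand_matrix(4, 2, rng)  # decoder for person B
    before = recon_loss(enc, dec_a, faces_a) + recon_loss(enc, dec_b, faces_b)
    # Step 2 (training): each decoder learns to rebuild its own person
    # from the shared encoder's compact code.
    for _ in range(epochs):
        for xa, xb in zip(faces_a, faces_b):
            train_step(enc, dec_a, xa, lr)
            train_step(enc, dec_b, xb, lr)
    after = recon_loss(enc, dec_a, faces_a) + recon_loss(enc, dec_b, faces_b)
    return enc, dec_a, dec_b, faces_a, faces_b, before, after


def swap_a_to_b(enc, dec_b, face_a):
    # Step 3 (generation): encode a face of A, decode with B's decoder.
    return matvec(dec_b, matvec(enc, face_a))
```

The design choice worth noting is the shared encoder: because both decoders read the same compact code, the code is pushed to capture what the two people have in common (here, the expression), while each decoder supplies the identity. How cleanly that separation emerges depends on the data and training, even in this toy setting.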

Potential Uses of Deepfakes

While deepfakes are often associated with malicious activities, they also have legitimate applications:

- Entertainment and Media: In movies and TV shows, deepfakes can be used to create realistic special effects, age or de-age actors, or even bring deceased actors back to the screen.

- Education and Training: Deepfakes can generate realistic simulations for training purposes, such as creating lifelike scenarios for medical or military training.

- Personalization: Customized content, such as personalized videos or messages, can be created for marketing or entertainment purposes.

Challenges and Risks of Deepfakes

Despite their potential benefits, deepfakes pose several significant challenges and risks:

- Misinformation and Fake News: Deepfakes can be used to spread false information and create fake news, potentially influencing public opinion and sowing discord.

- Privacy Violations: Individuals can become victims of deepfake technology, with their likenesses used without consent in fake videos or images that can be damaging or defamatory.

- Fraud and Identity Theft: Deepfakes can facilitate fraud and identity theft by creating convincing impersonations, leading to financial and reputational harm.

- Erosion of Trust: The prevalence of deepfakes can erode trust in digital media, making it difficult to discern what is real and what is not.

Mitigating the Risks

Addressing the threats posed by deepfakes requires a multifaceted approach involving technology, legislation, and public awareness:

- Detection Technology: Researchers are developing advanced tools to detect deepfakes. These tools analyze inconsistencies in the media, such as unnatural facial movements or anomalies in the metadata, to identify fakes.

- Legislation: Governments need to enact laws that address the creation and distribution of malicious deepfakes. Legal frameworks can provide recourse for victims and deter potential abusers.

- Public Awareness: Educating the public about the existence and risks of deepfakes is crucial. People should be trained to critically evaluate digital content and recognize potential fakes.

- Ethical Standards: The tech industry should establish ethical standards for the use of AI and deepfake technology, promoting responsible practices and discouraging misuse.
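To make the detection idea from the first bullet more concrete, here is a crude sketch of checking for unnatural facial movement. It assumes some other tool has already tracked one facial landmark (for example, the tip of the nose) across video frames, and it flags frames where the landmark jumps far more than is typical for that clip. The function name `flag_jumpy_frames` and the `factor` threshold are hypothetical, and real detectors generally rely on trained models rather than a single hand-written rule like this one.

```python
import math
from statistics import median


def flag_jumpy_frames(track, factor=5.0):
    """Return indices of frames whose landmark moved unusually far.

    track:  list of (x, y) positions of one landmark, one entry per frame.
    factor: how many times the clip's median movement counts as suspicious
            (an arbitrary value chosen for this sketch).
    """
    # Distance the landmark travels between consecutive frames.
    steps = [
        math.hypot(x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]
    if not steps:
        return []
    # The small floor avoids a zero threshold when the median movement is zero.
    typical = max(median(steps), 1e-9)
    # steps[i] is the movement into frame i + 1.
    return [i + 1 for i, s in enumerate(steps) if s > factor * typical]
```

A flagged frame is only a hint that something is worth a closer look: fast head movements or camera cuts would trigger the same rule, which is one reason practical tools combine many signals.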

Conclusion

Deepfakes represent a groundbreaking advancement in digital media manipulation, with the potential for both beneficial and harmful applications. Understanding what deepfakes are, how they are created, and the risks they pose is essential for navigating the digital landscape. By fostering technological innovation, implementing robust legal frameworks, and raising public awareness, we can harness the positive potential of deepfakes while mitigating their negative impacts. As we continue to grapple with this powerful technology, vigilance and ethical considerations will be key to ensuring that deepfakes are used responsibly.